From Logic Theorist to GPT: How Has AI Evolved?

In a rapidly digitising world, Artificial Intelligence (AI) has emerged as a transformative force in business operations.

The field has come a long way since its inception in 1956.

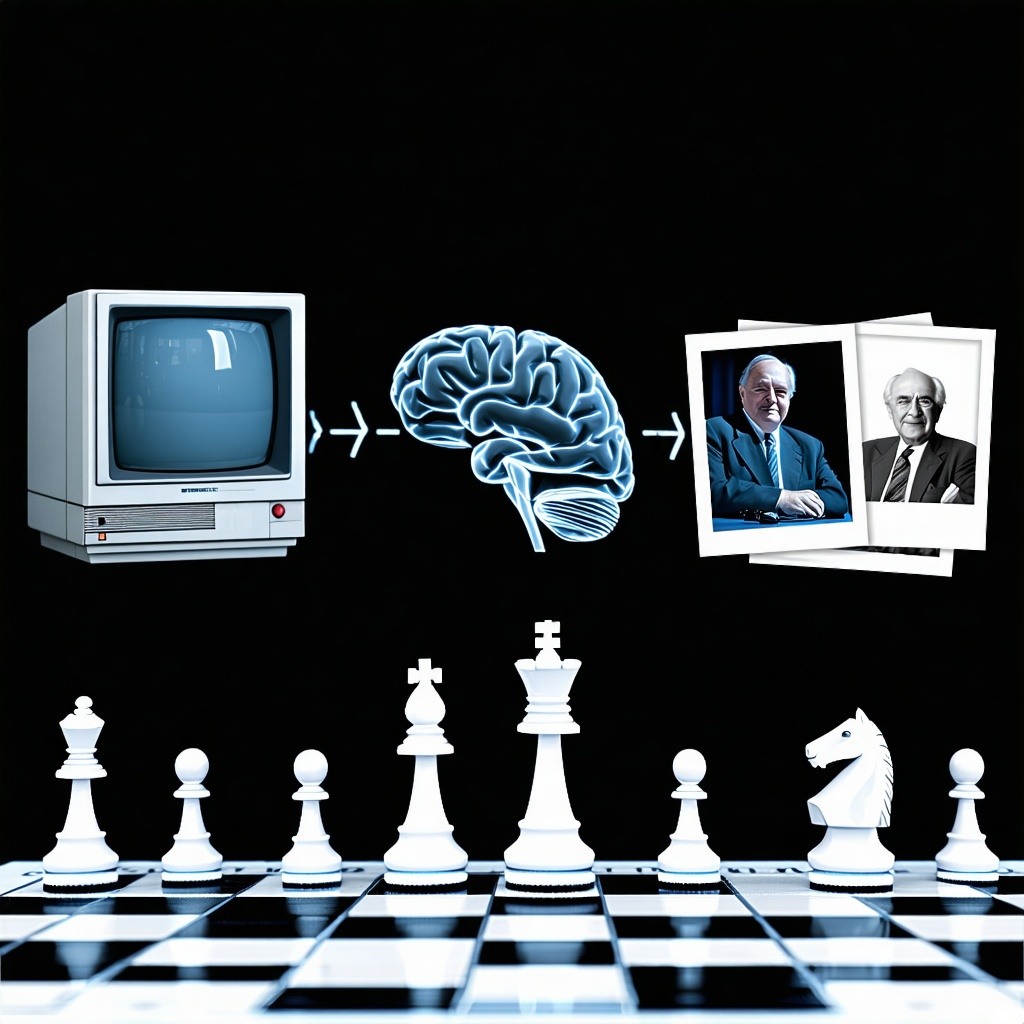

This journey began with pioneers like Allen Newell and Herbert Simon, who developed the Logic Theorist, the first program deliberately designed to perform automated reasoning. Often described as "the first artificial intelligence program," the Logic Theorist set the stage for decades of innovation.

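In 1997, AI reached a significant milestone when IBM's Deep Blue defeated reigning world chess champion Garry Kasparov. The victory demonstrated AI's potential for tackling complex tasks.

In parallel, the neural network architectures that would later underpin modern language models were evolving: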

- Neural Networks (NN): Early feedforward neural networks processed each input independently, so they struggled with sequential data and could not capture context over time.

- Recurrent Neural Networks (RNN): Introduced to address this limitation, RNNs contain loops that feed each step's hidden state back in alongside the next input, giving the network a form of memory that captures context.

- Long Short-Term Memory (LSTM): LSTMs improved on RNNs by adding memory cells that can retain information over much longer sequences. Gates (input, forget, and output) control the flow of information, letting the network decide what to store, what to forget, and what to output at each step of a sequence.
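To make this "memory" concrete, here is a minimal sketch of one RNN step and one LSTM step in Python, using single numbers instead of the vectors and weight matrices real networks use. The function names and all weight values are hypothetical, chosen by hand for illustration rather than learned from data.

```python
import math


def sigmoid(z: float) -> float:
    """Squash z into the range (0, 1); LSTM gates use this as a 'how much' dial."""
    return 1.0 / (1.0 + math.exp(-z))


def rnn_step(x: float, h_prev: float, w_x: float = 0.5, w_h: float = 0.8, b: float = 0.0) -> float:
    """One RNN step: the new hidden state combines the current input with the previous state (the 'loop')."""
    return math.tanh(w_x * x + w_h * h_prev + b)


# Hypothetical scalar weights for a single LSTM unit (real models learn vectors and matrices).
LSTM_PARAMS = {
    "wf": 0.0, "uf": 0.0, "bf": 5.0,   # forget gate biased towards "keep"
    "wi": 5.0, "ui": 0.0, "bi": 0.0,   # input gate opens wider when the input is large
    "wo": 0.0, "uo": 0.0, "bo": 0.0,   # output gate fixed at sigmoid(0) = 0.5
    "wg": 1.0, "ug": 0.0, "bg": 0.0,   # candidate memory content
}


def lstm_step(x: float, h_prev: float, c_prev: float, p: dict) -> tuple:
    """One LSTM step returning the new (hidden state, memory cell)."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate: how much old memory to keep
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate: how much new content to write
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate: how much memory to expose
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate content to store
    c = f * c_prev + i * g                                   # memory cell: keep some old, add some new
    h = o * math.tanh(c)                                     # hidden state passed to the next step
    return h, c


def run_rnn(xs):
    """Feed a whole sequence through the RNN and return the final hidden state."""
    h = 0.0
    for x in xs:
        h = rnn_step(x, h)
    return h


def run_lstm(xs, p=LSTM_PARAMS):
    """Feed a sequence through the LSTM; return the final hidden state and the cell value at each step."""
    h, c = 0.0, 0.0
    cells = []
    for x in xs:
        h, c = lstm_step(x, h, c, p)
        cells.append(c)
    return h, cells


if __name__ == "__main__":
    # A single "1" at the start of the sequence, followed by zeros.
    seq = [1.0, 0.0, 0.0, 0.0, 0.0]
    print("RNN final state:", round(run_rnn(seq), 4))
    _, cells = run_lstm(seq)
    print("LSTM memory cell over time:", [round(c, 4) for c in cells])
```

Running it illustrates the idea rather than proving anything: the RNN's final state still carries a trace of the first input through the loop, while the LSTM's forget gate, biased close to 1 here, lets the memory cell hold the stored value almost unchanged across later steps.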

The Rise of Transformers and Large Language Models (LLMs)

In 2017, the transformer architecture was introduced, paving the way for today's Large Language Models (LLMs). Unlike traditional supervised machine learning, which relies on labelled data that is often expensive and hard to obtain, LLMs are pre-trained on unlabelled text: the model learns by predicting the next word, so the text supplies its own labels. OpenAI has taken this to an 'extreme', leveraging the sheer scale of text available on the internet. I'd imagine the rationale behind this is that all languages can be broken down into combinations of words and phrases. While the way these combinations are used can be highly creative and culturally diverse, if a system can observe and analyse all texts, it can master linguistic patterns, contexts and relationships, probably better than any individual human can.
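GPT-style models are pre-trained by predicting the next token in a text. The sketch below is not a transformer; it is a toy word-counting model whose only purpose is to show how unlabelled text supplies its own labels. The function names, the two-word context window and the corpus are hypothetical choices for illustration.

```python
from collections import Counter, defaultdict


def next_word_pairs(text, context_size=2):
    """Turn raw, unlabelled text into (context, next_word) training examples.

    The 'label' for each example is simply the word that actually follows the
    context in the text, so no human annotation is needed.
    """
    words = text.lower().split()
    return [
        (tuple(words[i - context_size:i]), words[i])
        for i in range(context_size, len(words))
    ]


def train_counts(pairs):
    """A toy 'model': count how often each word follows each context."""
    counts = defaultdict(Counter)
    for context, target in pairs:
        counts[context][target] += 1
    return counts


def predict(counts, context):
    """Return the word seen most often after `context`, or None if the context is new."""
    if context not in counts:
        return None
    return counts[context].most_common(1)[0][0]


corpus = "The cat sat on the mat . The dog sat on the rug . The cat sat on the sofa ."
pairs = next_word_pairs(corpus)
model = train_counts(pairs)

print(pairs[:3])
# [(('the', 'cat'), 'sat'), (('cat', 'sat'), 'on'), (('sat', 'on'), 'the')]
print(predict(model, ("sat", "on")))  # the
```

A transformer replaces the counting table with a neural network that can generalise to contexts it has never seen, but the training signal is the same kind of "what comes next?" question, which is why no manual labelling is required.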

This development is incredibly exciting! Achieving this level of sophistication requires not only the intricate design of the transformer models themselves but also an enormous amount of computing power. This is where advanced chips, such as Nvidia's GPUs, play a crucial role. OpenAI's GPT models have also been propelled forward by substantial investment, amounting to billions of dollars, which has made training at this scale possible.

However, the journey to mastering language with AI is not without its challenges. One fundamental and arguably unavoidable issue is hallucination: the model generates information that sounds plausible but is inaccurate. This happens because transformers are statistical in essence, producing likely continuations of text rather than verified facts, and because internet training data is itself not always reliable.
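A toy example can show why a purely statistical model may produce fluent falsehoods. The sketch below uses a bigram model (it only records which word has followed which), far simpler than a transformer, trained on two true sentences; the function names and data are hypothetical. Because it learns word patterns rather than facts, it can recombine them into a sentence that is grammatical but false.

```python
from collections import defaultdict


def build_bigrams(sentences):
    """Record which words have been seen following each word (a toy bigram model)."""
    nxt = defaultdict(set)
    for s in sentences:
        words = s.split() + ["<end>"]
        for a, b in zip(words, words[1:]):
            nxt[a].add(b)
    return nxt


def all_generations(nxt, start, max_len=10):
    """Enumerate every sentence the bigram model could produce from `start`.

    A real LLM samples one continuation at a time from learned probabilities;
    enumerating all paths here just makes the outcome deterministic.
    """
    results = []

    def walk(path):
        if len(path) > max_len:
            return
        for w in sorted(nxt[path[-1]]):
            if w == "<end>":
                results.append(" ".join(path))
            else:
                walk(path + [w])

    walk([start])
    return results


training_facts = [
    "paris is the capital of france",
    "rome is the capital of italy",
]
model = build_bigrams(training_facts)
outputs = all_generations(model, "paris")
for sentence in outputs:
    flag = "seen in training" if sentence in training_facts else "NOT in training data"
    print(f"{sentence!r}: {flag}")
```

Every word-to-word step in "paris is the capital of italy" was seen in training, yet the sentence as a whole is false. If the model sampled the word after "of" in proportion to how often each continuation was seen, it would state the false sentence about half the time when starting from "paris".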

Nevertheless, it is an unbelievable achievement by any measure! While these LLMs are termed "foundation models," an additional AI agent layer is needed on top of them to suppress hallucination, ensure accuracy, and execute tasks for commercial applications.
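How such an agent layer should work is an open design question; the sketch below shows one possible, hypothetical approach, in which the agent treats the foundation model's output as a draft and checks it against a trusted source before using it. `fake_llm`, `TRUSTED_FACTS` and `agent_answer` are invented for illustration; in practice the model call would go to a real LLM and the trusted source might be a company database.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical trusted source, e.g. a database the agent is allowed to query.
TRUSTED_FACTS: Dict[str, str] = {
    "capital of france": "paris",
    "capital of italy": "rome",
}


def fake_llm(question: str) -> str:
    """Stand-in for a foundation-model call; it confidently gets one answer wrong."""
    canned = {"capital of france": "paris", "capital of italy": "milan"}
    return canned.get(question, "unknown")


def agent_answer(
    question: str,
    llm: Callable[[str], str],
    facts: Dict[str, str],
) -> Tuple[str, str]:
    """Ask the model, then check its draft against trusted facts before using it."""
    draft = llm(question)
    trusted: Optional[str] = facts.get(question)
    if trusted is None:
        return draft, "unverified"   # nothing to check against: pass through but flag it
    if draft == trusted:
        return draft, "verified"
    return trusted, "corrected"      # draft contradicted the trusted source


for q in ["capital of france", "capital of italy", "capital of spain"]:
    print(q, "->", agent_answer(q, fake_llm, TRUSTED_FACTS))
```

Returning a status alongside the answer keeps the agent's decision visible: a commercial system might route "unverified" or "corrected" answers to a human reviewer rather than silently overriding the model.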